The Secret Language of Machines: A Human Introduction to Embeddings in Generative AI

Every now and then, a seemingly simple idea reshapes how we think about machines and language. Embeddings are one of those ideas. They’re not new: word embeddings like Word2Vec hit the scene over a decade ago. But in the world of Generative AI, embeddings have quietly become the unsung heroes of everything from chatbots to image generation to search engines that somehow get you.

They’re like the blood type of AI models: invisible most of the time, but absolutely essential for things to function without chaos.

These embeddings aren’t just math. They’re philosophy, too. They’re about what it means to represent meaning, similarity, context. In other words, they’re the way we get machines to understand, in a numerical way, things they were never built to feel.

Let’s take a walking tour of this strange, powerful, and surprisingly intuitive concept.

What Are Embeddings?

If you’ve ever wondered how ChatGPT knows that “cat” is more like “dog” than “carburetor,” embeddings are the answer.

At a very high level, embeddings are vector representations of data, a fancy way of saying we turn words (or images, code snippets, audio clips, even entire documents) into lists of numbers, and then do math on those numbers to figure out meaning, similarity, or context.

These aren’t arbitrary numbers. They live in a high-dimensional space (like hundreds or thousands of dimensions), where semantically similar things are closer together. So in this strange numerical universe:

- “Paris” - “France” + “Germany” ≈ “Berlin”

- “happy” might be close to “joyful,” and far from “miserable”

- “open source” and “GitHub” could be neighbors

This works not because of a dictionary, but because machine learning models learn from massive amounts of text, finding statistical patterns in how words and concepts appear together.

The result? Machines start to understand relationships. Not perfectly. Not emotionally. But practically.
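Here’s a tiny sketch of that arithmetic in Python. The vectors are made up by hand for illustration (three dimensions instead of hundreds, and no real model produced them), but the mechanics are the same: subtract, add, then look for the nearest neighbor by cosine similarity.

```python
import math

# Hand-made toy vectors (hypothetical values, not from a real model).
# Dimensions loosely mean: [is a capital city, French-ness, German-ness]
toy_vectors = {
    "Paris":      [1.0, 1.0, 0.0],
    "France":     [0.0, 1.0, 0.0],
    "Germany":    [0.0, 0.0, 1.0],
    "Berlin":     [1.0, 0.0, 1.0],
    "carburetor": [0.0, 0.1, 0.1],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c, vectors):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [word for word in vectors if word not in (a, b, c)]
    return max(candidates, key=lambda word: cosine_similarity(target, vectors[word]))

print(analogy("Paris", "France", "Germany", toy_vectors))  # prints "Berlin"
```

With real embeddings the result is only approximately “Berlin,” which is why the nearest-neighbor lookup matters more than the exact arithmetic.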

The Power of Vectors: Why Numbers Matter

In GenAI, vectors are a way of placing data into a consistent format that computers can measure, compare, and manipulate.

Let’s take an example.

Suppose I feed a model the words:

“The cat sat on the mat.”

And then:

“The dog lay on the rug.”

The words are different, but the structure and meaning are similar. A good embedding model will represent these two sentences with vectors that are close together. That’s how a generative model knows these are kind of saying the same thing, even if the words don’t match.

This is the core of semantic similarity, and it’s what makes so many things possible in GenAI: from smarter autocomplete suggestions to meaningful AI conversations to more relevant search results.

It’s also why embeddings are so powerful for retrieval-augmented generation (RAG).
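To make the cat and dog sentences concrete, here is a rough sketch that builds a sentence vector by averaging word vectors and then compares sentences with cosine similarity. Two loud assumptions: the word vectors are invented for this example, and averaging is a simplified stand-in for what a trained embedding model actually does.

```python
import math
import re

# Hypothetical 4-dimensional word vectors, invented for this example.
# Dimensions loosely mean: [animal, resting, floor covering, finance]
WORD_VECTORS = {
    "cat":    [0.9, 0.1, 0.0, 0.0],
    "dog":    [0.8, 0.2, 0.0, 0.0],
    "sat":    [0.0, 0.9, 0.1, 0.0],
    "lay":    [0.1, 0.8, 0.1, 0.0],
    "mat":    [0.0, 0.1, 0.9, 0.0],
    "rug":    [0.1, 0.0, 0.9, 0.0],
    "stocks": [0.0, 0.0, 0.0, 1.0],
    "fell":   [0.0, 0.3, 0.0, 0.6],
}

def embed_sentence(sentence):
    """Average the vectors of known words; unknown words like "the" are skipped."""
    words = re.findall(r"[a-z]+", sentence.lower())
    known = [WORD_VECTORS[w] for w in words if w in WORD_VECTORS]
    if not known:
        raise ValueError("no known words in sentence")
    return [sum(column) / len(known) for column in zip(*known)]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

cat_sentence = embed_sentence("The cat sat on the mat.")
dog_sentence = embed_sentence("The dog lay on the rug.")
stock_sentence = embed_sentence("Stocks fell sharply.")

# The two pet sentences score close to 1; the finance sentence scores far lower.
print(round(cosine_similarity(cat_sentence, dog_sentence), 3))
print(round(cosine_similarity(cat_sentence, stock_sentence), 3))
```

Not a single content word is shared between the first two sentences, yet their vectors point in nearly the same direction. That is the whole trick.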

Embeddings in Practice

So where do embeddings actually matter?

1. Chatbots That Remember and Understand

If you’re chatting with a customer support bot and it actually seems to “get” your question, embeddings are in play. Most good chatbots use embeddings to figure out what you’re really asking, then match your query to a pre-existing FAQ or knowledge base.

These embeddings let the bot map your vague, annoyed message like:

“Why is my order late again?!”

to a more neutral knowledge-base entry like:

“Shipping delays and tracking information.”

Under the hood, both are turned into vectors, and a similarity search pulls up the best match.
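A minimal sketch of that matching step might look like this. The vectors are hypothetical placeholders (a real bot would get them from an embedding model), and `best_match` is just an illustrative helper name.

```python
import math

# Hypothetical pre-computed embeddings for knowledge-base entries.
knowledge_base = {
    "Shipping delays and tracking information": [0.9, 0.1, 0.0],
    "Refunds and returns":                      [0.3, 0.9, 0.1],
    "Resetting your password":                  [0.0, 0.1, 0.9],
}

# Hypothetical embedding of the user's message "Why is my order late again?!"
query_vector = [0.8, 0.3, 0.1]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_match(query, entries):
    """Return the (title, score) pair with the highest cosine similarity."""
    scored = [(title, cosine_similarity(query, vec)) for title, vec in entries.items()]
    return max(scored, key=lambda pair: pair[1])

title, score = best_match(query_vector, knowledge_base)
print(title)  # prints "Shipping delays and tracking information"
```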

2. Search That Doesn’t Suck

Traditional search engines used to just do keyword matching. But try searching for something like:

“Best camera for low light concerts”

A dumb search engine looks for “best,” “camera,” “low,” “light,” “concerts.”

A smart one uses embeddings to understand the intent behind your query and returns relevant results even if the articles don’t use the exact same words. Semantic matching!

That’s vector search, powered by embeddings. Tools like Pinecone, Weaviate, and FAISS are making this approach scalable and real-time.
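The difference between the two approaches shows up even in a toy comparison. In this sketch the article titles and their vectors are all invented for illustration, and the "index" is a plain dictionary scanned by brute force, which is the part a vector database is meant to replace at scale.

```python
import math
import re

STOPWORDS = {"for", "the", "a", "that"}

def keywords(text):
    """Lowercased words with a few stopwords removed."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS

def keyword_score(query, title):
    """Number of keywords the query and the title have in common."""
    return len(keywords(query) & keywords(title))

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings; dimensions loosely mean
# [photography, low light, live music, food]
articles = {
    "Shooting dim gigs: sensors that handle darkness": [0.7, 0.8, 0.5, 0.0],
    "Best light snacks for summer concerts":           [0.0, 0.1, 0.5, 0.9],
    "Camera strap buying guide":                       [0.9, 0.0, 0.0, 0.0],
}

query = "Best camera for low light concerts"
query_vector = [0.8, 0.7, 0.6, 0.0]  # hypothetical embedding of the query

by_keywords = sorted(articles, key=lambda t: keyword_score(query, t), reverse=True)
by_vectors = sorted(
    articles, key=lambda t: cosine_similarity(query_vector, articles[t]), reverse=True
)

print(by_keywords[0])  # prints "Best light snacks for summer concerts"
print(by_vectors[0])   # prints "Shooting dim gigs: sensors that handle darkness"
```

Keyword matching rewards the snack article for sharing three words with the query, while the article that actually answers the question shares none.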

3. Multimodal Magic

Want to find a picture that “feels like freedom”? Or generate a caption for a complex image?

Embeddings don’t just work for text. In models like CLIP (from OpenAI), images and text are embedded into the same space. That means you can compare pictures and words directly, because both are just vectors now.

This unlocks stuff like:

- Searching for images with natural language

- Using image context to generate better captions

- Creating personalized art with text prompts (enter DALL·E)

4. Retrieval-Augmented Generation (RAG)

Here’s where embeddings really earn their keep.

Large language models like GPT-4 don’t have perfect memory. Their knowledge is frozen at training time, so if you’re building a system that needs to answer questions using external knowledge, you don’t want to fine-tune the entire model every time your data changes.

Instead, you do this:

- Embed your documents into vector space

- When a user asks something, embed the query

- Use a vector similarity search to find relevant documents

- Feed those documents into the prompt context for the LLM to generate a response

This is Retrieval-Augmented Generation, and it’s the backbone of many enterprise GenAI products today.
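The four steps can be sketched end to end in a few lines. Big caveat: the `embed` function below is a bag-of-words stand-in so the example runs without any model or API, which means it only matches shared words rather than meaning. The sample documents and the prompt wording are also assumptions, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter

def embed(text):
    """Stand-in embedding: word counts (hypothetical; a real system calls a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

documents = [
    "Shipping usually takes 3 to 5 business days.",
    "Refunds are issued within 10 days of a return.",
    "Our support team is available on weekdays.",
]

# Step 1: embed the documents once, ahead of time.
index = [(doc, embed(doc)) for doc in documents]

def retrieve(question, top_k=1):
    """Steps 2 and 3: embed the query, then rank documents by similarity."""
    query_vector = embed(question)
    ranked = sorted(
        index, key=lambda item: cosine_similarity(query_vector, item[1]), reverse=True
    )
    return [doc for doc, _ in ranked[:top_k]]

def build_prompt(question, context_docs):
    """Step 4: place the retrieved documents into the prompt context."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

question = "How long does shipping take?"
prompt = build_prompt(question, retrieve(question))
print(prompt)
```

When the data changes, only the index needs rebuilding. The model itself stays untouched, which is the point of the approach.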

How to Experiment With Embeddings (Without Losing Your Mind)

If you want to get hands-on, here are a few accessible ways to explore embeddings:

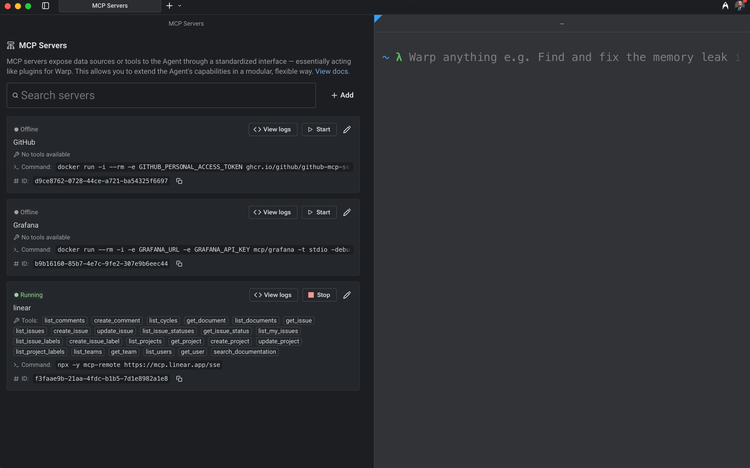

- OpenAI’s API: Try text-embedding-3-small. It’s fast, affordable, and well-documented.

- Hugging Face: Search for embedding models, try them in your browser, or download them for local use.

- LangChain: An increasingly popular framework for building GenAI apps. Has great support for RAG and embedding pipelines.

- Pinecone and Weaviate: Managed vector databases that make indexing and querying embeddings scalable.

- TensorBoard Projector: Visualize embeddings in 2D or 3D. See how clusters form. It’s strangely addictive.
