Vector Databases: Why Your Recommender System Just Got Smarter

You know that moment when your Spotify Discover playlist hits you with a song so on-point it feels like the algorithm knows you better than your therapist? Behind that magic is a quiet revolution in how we search and compare data: vectors. And now, a new class of databases is emerging to handle them at scale: vector databases.

As a TPM, especially one working in AI/ML, search, anomaly detection, or fraud detection, you need to understand what vector databases are, how they work, and why your engineering managers keep bringing up something called “FAISS” in meetings.

Let’s go on a quick journey.

From Rows and Columns to High-Dimensional Math

Traditional databases, the kind we’re all used to, are great at exact matches. Want to look up a user by email? No worries, mate! SQL has your back. But try asking, “Show me products similar to this one” or “Find me users with similar behavior,” and suddenly you’re reaching for a full-text search engine or an embedding model: something fuzzier and less certain.

Enter vector databases. Instead of storing just strings, ints, and booleans, they store vectors: dense, high-dimensional representations of data. Picture a 768-dimensional float array that represents the “meaning” of a sentence or an image.

So instead of asking:

“Does this equal that?”

you’re asking:

“How close are these two things in vector space?”

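The answer comes via nearest neighbor search: given a query vector, find the stored vectors closest to it under a distance metric such as cosine similarity or Euclidean distance. That’s the beating heart of a vector database.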
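To make “closeness” concrete, here is a minimal sketch of exact (brute-force) nearest neighbor search using cosine similarity. It assumes tiny hand-made 3-dimensional vectors standing in for real embeddings (which would have hundreds of dimensions, such as the 768 mentioned above), and the item names are purely illustrative. A real vector database replaces the linear scan with an approximate index, but the question it answers is the same.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbors(query, vectors, k=2):
    """Exact k-NN: score every stored vector against the query, keep the top k."""
    scored = [(cosine_similarity(query, vec), item_id) for item_id, vec in vectors.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [item_id for _, item_id in scored[:k]]


# Toy 3-dimensional "embeddings" (hypothetical values chosen for illustration).
catalog = {
    "lofi_beats": [0.9, 0.1, 0.0],
    "jazz_piano": [0.8, 0.3, 0.1],
    "death_metal": [0.0, 0.1, 0.95],
}

query = [0.9, 0.15, 0.0]  # think "something chill"
print(nearest_neighbors(query, catalog, k=2))  # → ['lofi_beats', 'jazz_piano']
```

Scanning every vector is exact but scales linearly with the collection size, which is exactly the cost the indexes below are designed to avoid.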

The Library vs the Vibe Check

Traditional databases are like a library. You know the author and title? Great, go to Row 7, Shelf C.

Vector databases are like a vibe check. You say, “I’m looking for books that feel like this one,” and the system pulls recommendations based on meaning, not exact matches. It’s a fuzzy, semantic, probabilistic world, and we love it here.

How It Works: Under the Hood

Here’s the architecture elevator pitch for your next design doc:

- Ingestion: You pass in raw data (text, images, audio), and an embedding model (e.g., OpenAI’s text-embedding-3-small, or a local BERT model) converts it to vector form.

- Storage: Vectors are stored in an optimized index. Popular options include:

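- HNSW (Hierarchical Navigable Small World graphs): A layered proximity graph that queries traverse greedily, from sparse upper layers down to the dense bottom layer. Great for fast, high-recall search, even at scale, at the cost of extra memory for the graph.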

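- IVF (Inverted File Index): Partitions vectors into clusters (typically with k-means) and, at query time, searches only the clusters closest to the query. Often used in combination with product quantization (IVF-PQ), which compresses vectors to save memory.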

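- Similarity Search: At query time, the input is converted to a vector with the same embedding model, and the database performs an Approximate Nearest Neighbor (ANN) search. Speed vs. accuracy is tunable: searching more IVF clusters or exploring more HNSW graph neighbors improves recall but adds latency.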

- Metadata Filtering: Vector DBs often support hybrid queries. Want to find “images similar to this one, but only uploaded in the past 30 days”? You can.
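The IVF idea, and the “speed vs. accuracy is tunable” point, can be illustrated with a deliberately tiny, pure-Python sketch. This is not how FAISS or any particular database implements IVF. The class name `ToyIVFIndex`, the hand-picked centroids (a real index would learn them with k-means), and the `nprobe` parameter (named after the analogous FAISS setting) are assumptions for illustration. Each vector lives in the bucket of its nearest centroid, and a query scans only the `nprobe` closest buckets.

```python
import math


def l2(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class ToyIVFIndex:
    """Hypothetical inverted-file index: one bucket ("inverted list") per centroid."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.lists = {i: [] for i in range(len(centroids))}

    def _nearest_centroids(self, vec):
        # Centroid indices ordered from closest to farthest.
        return sorted(range(len(self.centroids)), key=lambda i: l2(vec, self.centroids[i]))

    def add(self, item_id, vec):
        # Store each vector only in the bucket of its closest centroid.
        self.lists[self._nearest_centroids(vec)[0]].append((item_id, vec))

    def search(self, query, k=1, nprobe=1):
        # Scan only the nprobe closest buckets, then rank those candidates exactly.
        probed = self._nearest_centroids(query)[:nprobe]
        candidates = [entry for i in probed for entry in self.lists[i]]
        candidates.sort(key=lambda entry: l2(query, entry[1]))
        return [item_id for item_id, _ in candidates[:k]]


index = ToyIVFIndex(centroids=[[0.0, 0.0], [10.0, 0.0]])
points = {"a": [4.9, 0.0], "b": [1.0, 0.0], "c": [6.0, 0.0], "d": [9.0, 0.0]}
for item_id, vec in points.items():
    index.add(item_id, vec)

query = [5.2, 0.0]
print(index.search(query, k=1, nprobe=1))  # → ['c']  (less work, misses the true nearest)
print(index.search(query, k=1, nprobe=2))  # → ['a']  (scans every bucket: exact answer)
```

The query sits just on the far side of the boundary between clusters, so probing one bucket skips point `a`, the true nearest neighbor. Raising `nprobe` recovers it at the cost of scanning more vectors, which is the same recall-versus-latency dial described above.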

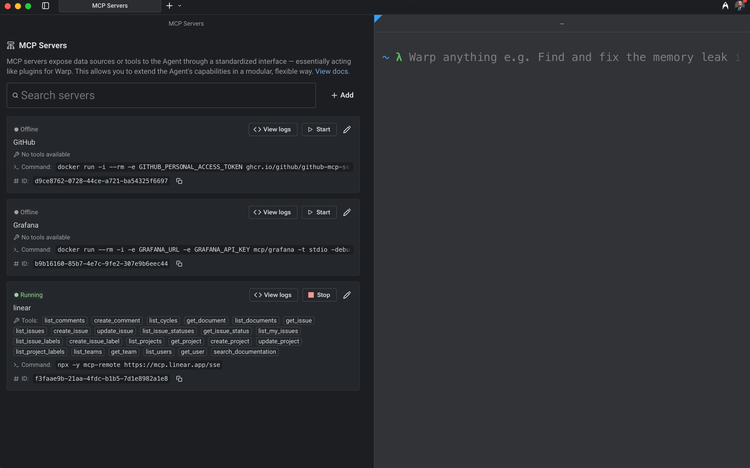

Popular vector DBs include:

Where Do Vector DBs Fit in System Design?

Here’s where TPMs need to listen up: Vector DBs don’t replace relational databases. Instead, they sit alongside them, handling similarity-based retrieval.
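One way to picture “sitting alongside” is a two-step hybrid query: the relational side answers the exact, structured part (“uploaded in the past 30 days”), and similarity ranking handles the fuzzy part. The sketch below assumes an in-memory SQLite table with a hypothetical `images` schema and a plain Python dict standing in for the vector store. Many vector DBs can apply such metadata filters natively instead, as the metadata filtering point earlier notes, so treat this as an illustration of the division of labor, not a recommended architecture.

```python
import math
import sqlite3
from datetime import date, timedelta


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


today = date(2024, 6, 30)  # fixed "today" so the example is reproducible

# Relational side: hypothetical image metadata, the system of record.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE images (id TEXT PRIMARY KEY, uploaded_on TEXT)")
db.executemany(
    "INSERT INTO images VALUES (?, ?)",
    [
        ("beach_new", (today - timedelta(days=3)).isoformat()),
        ("beach_old", (today - timedelta(days=90)).isoformat()),
        ("city_new", (today - timedelta(days=10)).isoformat()),
    ],
)

# Vector side: a dict standing in for the vector store (toy 2-D embeddings).
embeddings = {
    "beach_new": [0.9, 0.1],
    "beach_old": [0.95, 0.05],
    "city_new": [0.1, 0.9],
}


def similar_recent_images(query_vec, days=30, k=5):
    # Step 1: exact metadata filter in SQL (ISO date strings compare correctly as text).
    cutoff = (today - timedelta(days=days)).isoformat()
    rows = db.execute("SELECT id FROM images WHERE uploaded_on >= ?", (cutoff,)).fetchall()
    candidate_ids = [row[0] for row in rows]
    # Step 2: rank only the surviving candidates by vector similarity.
    candidate_ids.sort(key=lambda i: cosine_similarity(query_vec, embeddings[i]), reverse=True)
    return candidate_ids[:k]


print(similar_recent_images([1.0, 0.0]))  # → ['beach_new', 'city_new']
```

Note that `beach_old` is the closest match overall but is excluded by the date filter. Widening the window (for example `days=365`) lets it back in at the top of the ranking.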
