What are GANs, CNNs, SVMs & RNNs?

Walk into any AI conference, and you’ll hear conversations that sound like a game of Scrabble gone off the rails:

“We trained a CNN to preprocess for our GAN while benchmarking against an RNN. The SVM results weren’t bad either.”

To the uninitiated, it sounds like engineers are just flexing their ability to speak in acronyms. But behind those cryptic three-letter combos lie some of the most important tools in the machine learning toolkit.

So, let’s break down the Big Four:

- GAN: Generative Adversarial Network

- CNN: Convolutional Neural Network

- SVM: Support Vector Machine

- RNN: Recurrent Neural Network

This guide explains not only what they are but also why they matter, where they’re used, and why they’re sometimes misunderstood, even by mere-mortal TPMs like us. Let’s start with GANs.

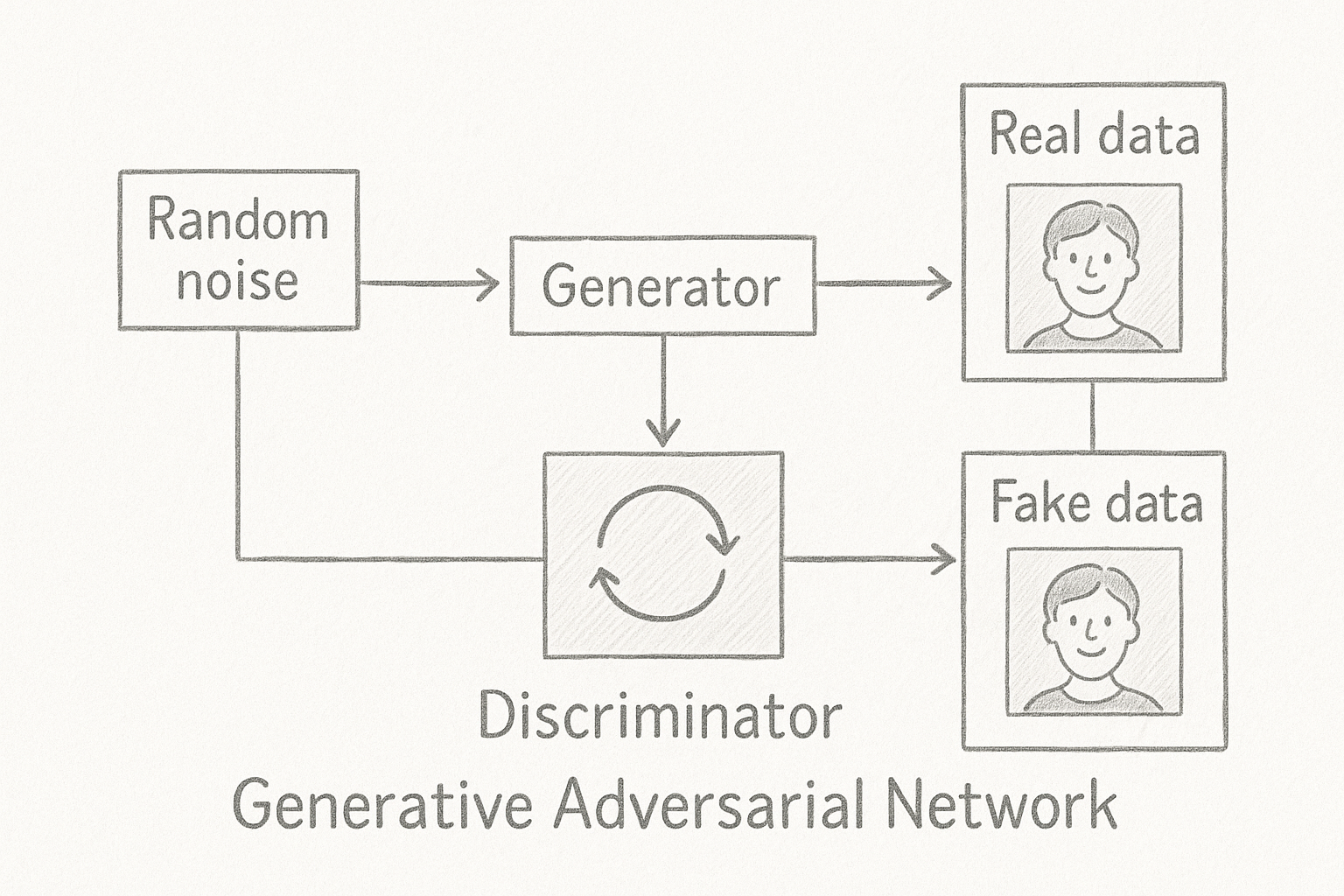

1. GAN – The Con Artist & The Cop

I like to think of a GAN, or Generative Adversarial Network, as a Jekyll-and-Hyde act. A GAN is two neural networks: one tries to fake data, and the other tries to spot the fake. It’s like a forger painting a fake Mona Lisa while an art critic tries to tell whether it’s a fraud.

How it works:

- The Generator takes in random noise and spits out something fake (say, a picture of a face).

- The Discriminator tries to tell whether an image is real (from training data) or fake (from the generator).

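- They train in alternation: the discriminator gets better at separating real from fake, then the generator gets better at producing fakes the discriminator accepts as real. Each improves by competing against the other, which is the “adversarial” in adversarial training.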
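To make the loop concrete, here is a deliberately tiny Python sketch that uses only the standard library. It rests on strong simplifying assumptions and is not how production GANs are built: the “real” data is 1-D Gaussian noise centered on 4.0, the generator (the con artist) only learns a shift `theta` that it adds to random noise, and the discriminator (the cop) is a single logistic unit. The function name `train_toy_gan` and every hyperparameter are hypothetical, and the R1-style penalty on the discriminator weight is an added assumption that damps the back-and-forth oscillation plain GAN training is prone to.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(real_mean=4.0, real_std=0.5, steps=2000, batch=64,
                  lr=0.1, r1_gamma=1.0, seed=0):
    rng = random.Random(seed)
    theta = 0.0       # generator G(z) = theta + real_std * z; only the shift theta is learned
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w * x + c), the "cop"
    for _ in range(steps):
        # 1) Discriminator step: ascend mean[log D(real)] + mean[log(1 - D(fake))],
        #    minus an R1-style penalty (r1_gamma / 2) * w**2 (assumption, for stability).
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        fake = [theta + real_std * rng.gauss(0.0, 1.0) for _ in range(batch)]
        gw = gc = 0.0
        for x in real:
            p = sigmoid(-(w * x + c))   # d log D(x) / d logit = 1 - D(x)
            gw += p * x
            gc += p
        for x in fake:
            p = sigmoid(w * x + c)      # d log(1 - D(x)) / d logit = -D(x)
            gw -= p * x
            gc -= p
        w += lr * (gw / batch - r1_gamma * w)
        c += lr * (gc / batch)

        # 2) Generator step (the "con artist"): non-saturating loss, i.e. ascend
        #    mean[log D(G(z))] so fresh fakes look more "real" to the updated D.
        gtheta = 0.0
        for _ in range(batch):
            logit = w * (theta + real_std * rng.gauss(0.0, 1.0)) + c
            gtheta += w * sigmoid(-logit)   # d log D(G(z)) / d theta
        theta += lr * gtheta / batch
    return theta, w, c

theta, w, c = train_toy_gan()
print(f"learned generator shift: {theta:.2f} (real mean 4.0)")
```

With these settings `theta` is expected to settle near the real mean of 4.0; the exact value depends on the random seed. A real GAN swaps both players for deep networks and learns a whole distribution rather than a single shift, but the alternating “cop, then con artist” update is the same.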

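Real-world uses:

- Generating realistic images, such as photos of faces that don’t exist

- Generating realistic audio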

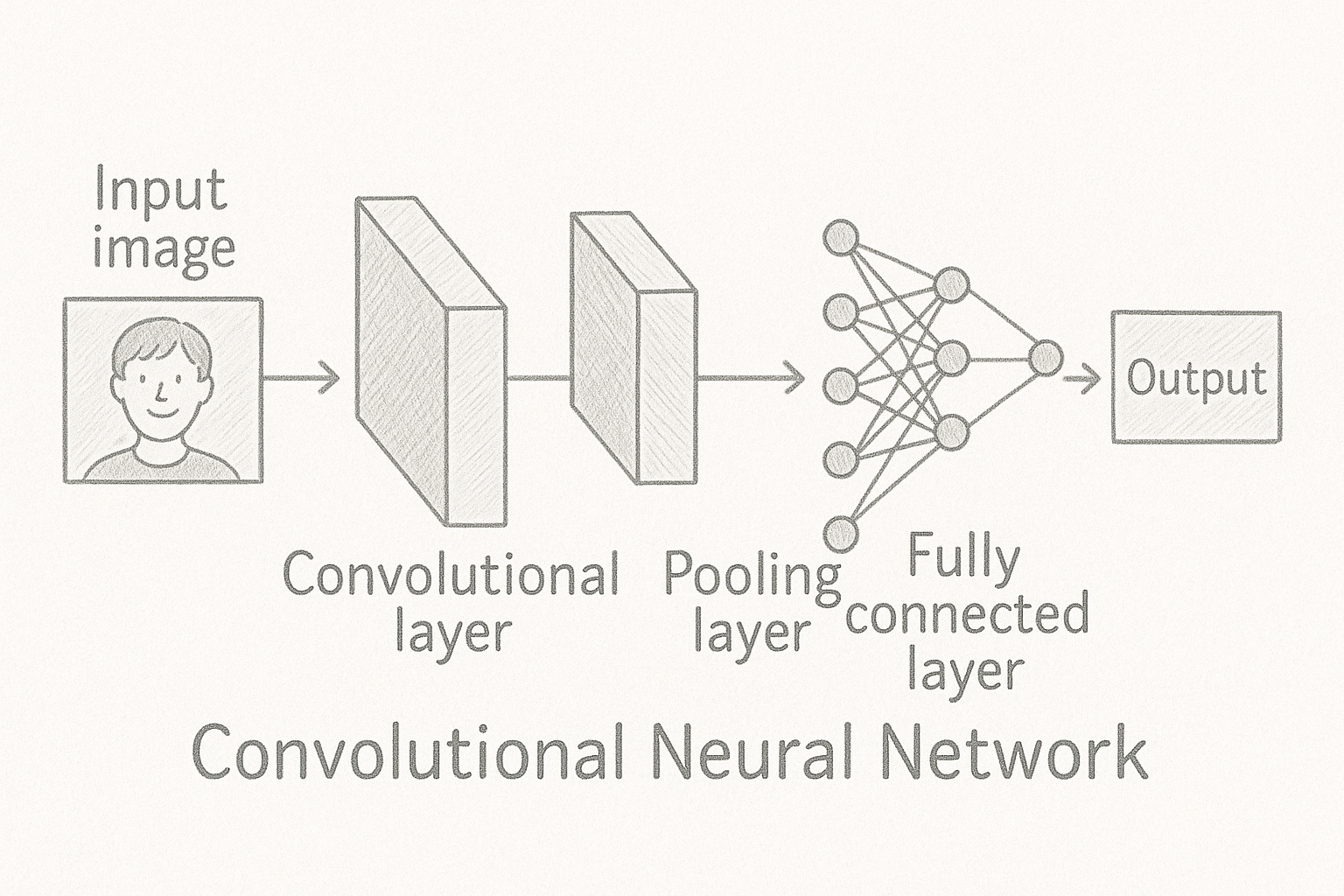

2. CNN – The Eyes of AI

A CNN, or Convolutional Neural Network, is your visual superhero. While GANs generate, CNNs recognize. They’re built for visual data: images, video, anything with pixels and spatial patterns.

How it works:

- Convolutional layers detect features (edges, shapes, textures).

- Pooling layers downsample the feature maps, shrinking their spatial size so later layers need less computation.

- Fully connected layers perform final classification or regression.
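The three layer types above can be sketched in plain Python on a tiny 6x6 image that contains a vertical edge. This is a toy under stated assumptions: the kernel is hand-picked (a Sobel-like vertical edge detector) rather than learned, and the helper names (`conv2d`, `relu`, `max_pool`, `dense`, `edge_score`) and the fully connected weights are hypothetical. Like most deep-learning libraries, `conv2d` here actually computes cross-correlation, meaning the kernel is not flipped.

```python
def conv2d(image, kernel):
    # "Valid" 2-D cross-correlation, stride 1, no padding (what DL libraries call convolution).
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    # Element-wise non-linearity applied after the convolution.
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    # Non-overlapping size x size max pooling: keeps the strongest response per window
    # and shrinks each spatial dimension by a factor of `size`.
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

def dense(features, weights, bias):
    # Fully connected layer with a single output unit.
    return sum(f * w for f, w in zip(features, weights)) + bias

# Hand-picked vertical edge detector (in a trained CNN these weights are learned).
EDGE_KERNEL = [[-1.0, 0.0, 1.0],
               [-1.0, 0.0, 1.0],
               [-1.0, 0.0, 1.0]]

def edge_score(image):
    fmap = relu(conv2d(image, EDGE_KERNEL))      # 6x6 -> 4x4 feature map
    pooled = max_pool(fmap)                      # 4x4 -> 2x2
    flat = [v for row in pooled for v in row]    # flatten to 4 features
    return dense(flat, [0.25] * len(flat), 0.0)  # hypothetical classifier weights

edge_image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
flat_image = [[1.0] * 6 for _ in range(6)]
print(edge_score(edge_image), edge_score(flat_image))  # prints: 3.0 0.0
```

The uniform image scores zero because the edge detector has nothing to respond to. In a real CNN, many such kernels are learned from data and stacked across several layers, so early layers find edges and later layers combine them into shapes and objects.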

Real-world uses:

- Medical imaging (X-rays, MRIs)

- Facial recognition (Face ID, surveillance)

- Self-driving cars (object detection)

- ImageNet classification

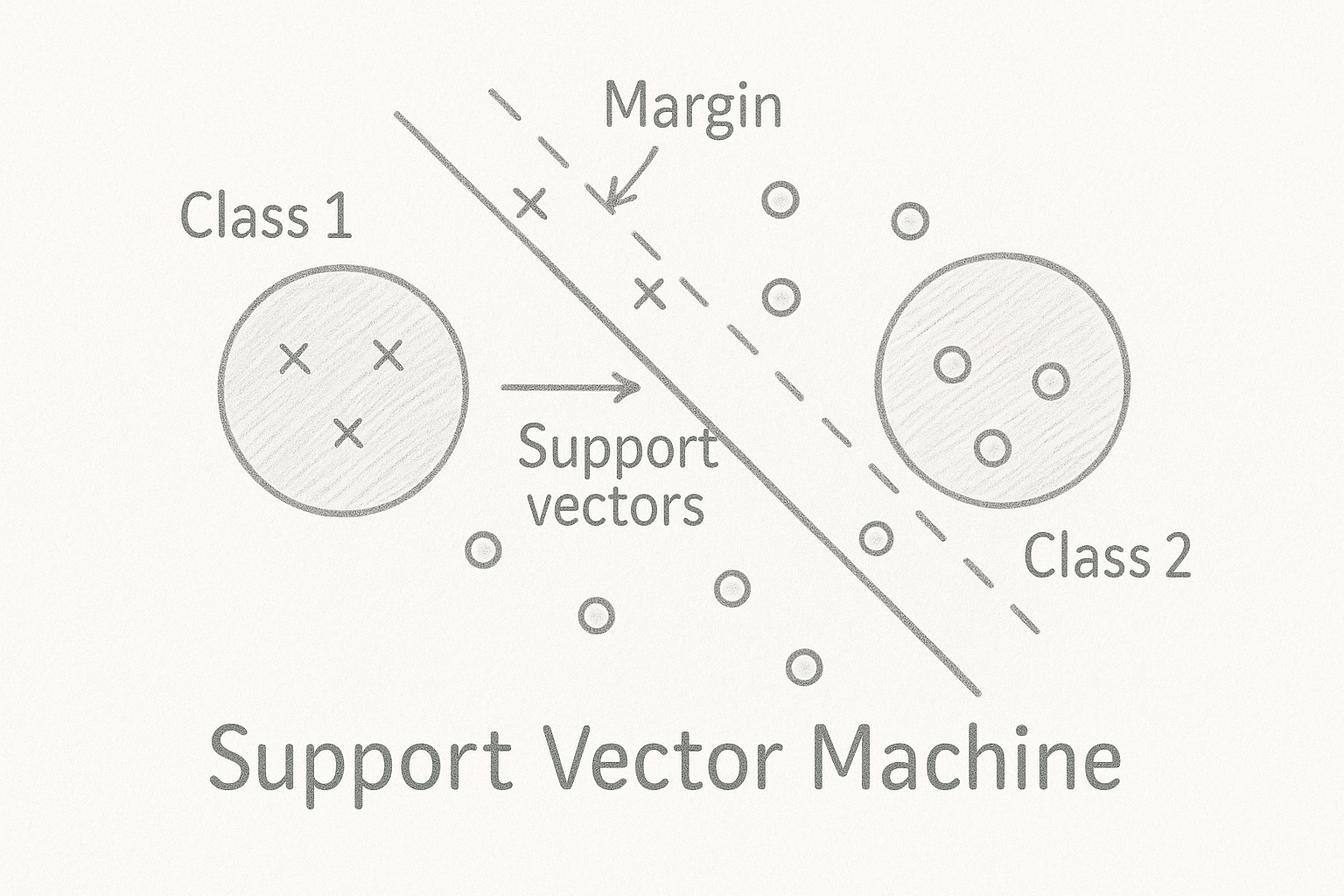

3. SVM – The Elegant Old-Schooler

An SVM, or Support Vector Machine, is a mathematically elegant model that separates categories with the cleanest possible boundary: the one with the widest margin.

How it works:

- Finds the maximum-margin hyperplane that separates two classes.

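- Uses the kernel trick to handle nonlinear data: it implicitly maps points into a higher-dimensional space where a straight (linear) boundary can separate them.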
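A minimal sketch of how the maximum-margin idea becomes a training procedure, assuming a linear kernel and no bias term. It uses Pegasos-style stochastic subgradient descent on the regularized hinge loss, which is one standard way to train a linear SVM; many libraries instead solve the equivalent dual problem. The toy points, `lam`, and the function names are hypothetical, and the points are visited in a fixed order rather than sampled at random so the result is deterministic.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def train_linear_svm(points, labels, lam=0.1, epochs=50):
    # Pegasos-style subgradient descent on the primal SVM objective
    #   (lam / 2) * ||w||^2 + mean(max(0, 1 - y * w.x))
    # Assumption: no bias term, so the hyperplane w.x = 0 passes through the origin.
    w = [0.0] * len(points[0])
    t = 0
    for _ in range(epochs):
        for x, y in zip(points, labels):       # fixed order instead of random sampling
            t += 1
            eta = 1.0 / (lam * t)              # decaying step size
            violates = y * dot(w, x) < 1       # inside the margin or misclassified
            # The regularizer shrinks w, which widens the margin 2 / ||w||.
            w = [(1.0 - eta * lam) * wi for wi in w]
            if violates:
                # Hinge-loss subgradient step pulls the boundary away from this point.
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w

points = [(2.0, 2.0), (3.0, 3.0), (2.0, 3.0), (-2.0, -2.0), (-3.0, -3.0), (-2.0, -3.0)]
labels = [1, 1, 1, -1, -1, -1]
w = train_linear_svm(points, labels)
predictions = [1 if dot(w, x) > 0 else -1 for x in points]
print(w, predictions)
```

For this toy data, the optimal solution puts only (2, 2) and (-2, -2) exactly on the margin. Those are the support vectors that give the method its name: the other points could move around (without crossing the margin) and the boundary would not change.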

Types:

- Linear SVM

- Nonlinear SVM (e.g., with RBF or polynomial kernels)
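The kernel trick is easiest to see with numbers. The sketch below, assuming 2-D inputs and a degree-2 polynomial kernel with no constant term, checks that the kernel value equals an ordinary dot product in an explicit 3-D feature space. That equivalence is what lets a nonlinear SVM work in the bigger space without ever building it. The function names are hypothetical; `rbf_kernel` is included for comparison, since the RBF kernel’s implicit feature space is infinite-dimensional and can only be used through the kernel.

```python
import math

def poly_kernel(x, y, degree=2, coef0=0.0):
    # Polynomial kernel: k(x, y) = (x . y + coef0) ** degree
    return (sum(a * b for a, b in zip(x, y)) + coef0) ** degree

def rbf_kernel(x, y, gamma=0.5):
    # RBF (Gaussian) kernel: k(x, y) = exp(-gamma * ||x - y||^2); equals 1 when x == y
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))

def phi(x):
    # Explicit feature map for the degree-2, coef0 = 0 kernel in 2-D:
    # phi(x) . phi(y) = x1^2 y1^2 + 2 x1 x2 y1 y2 + x2^2 y2^2 = (x . y)^2
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)

x, y = (1.0, 2.0), (3.0, 4.0)
implicit = poly_kernel(x, y)                           # (1*3 + 2*4)^2 = 121
explicit = sum(a * b for a, b in zip(phi(x), phi(y)))  # same value via 3-D features
print(implicit, explicit, rbf_kernel(x, y))
```

The two values agree up to floating-point rounding. Because SVM training and prediction only ever need kernel values between pairs of points, `rbf_kernel` can be dropped in the same way even though its feature space cannot be written out.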

Real-world uses:

- Spam detection

- Sentiment analysis

- Image classification

- Gene expression classification

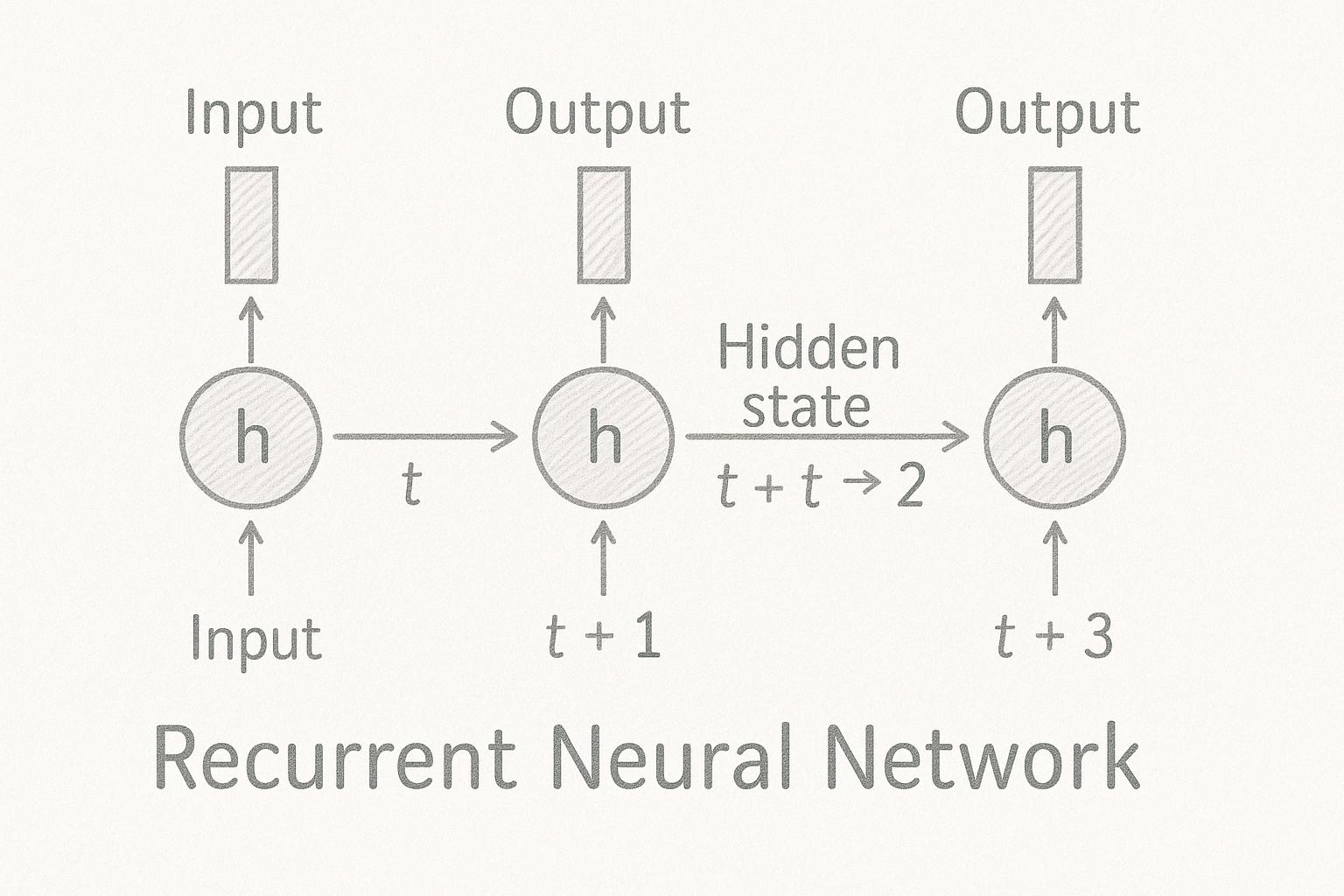

4. RNN – The Memory Keeper

An RNN, or Recurrent Neural Network, is a network that remembers what it has just seen, which makes it ideal for data that arrives in sequences: time series, speech, text, and so on.

How it works:

- Maintains a hidden state that carries context between inputs.

- Updates memory at each time step.

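- Variants like LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) add gates that decide what to keep and what to forget, which helps them hold on to information over longer sequences.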
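Here is a minimal sketch of the hidden-state update, assuming a single-unit (“Elman-style”) RNN with hand-picked scalar weights. Real RNNs use weight matrices learned by backpropagation through time; the function name `rnn_forward` and the weight values are hypothetical.

```python
import math

def rnn_forward(inputs, w_x=1.0, w_h=0.5, b=0.0, h0=0.0):
    # One-unit RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    # The same weights are reused at every time step; h carries context forward.
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

with_signal = rnn_forward([1.0, 0.0, 0.0, 0.0])  # a "1" arrives first, then silence
no_signal = rnn_forward([0.0, 0.0, 0.0, 0.0])
for t, (h_sig, h_none) in enumerate(zip(with_signal, no_signal)):
    print(t, round(h_sig, 4), round(h_none, 4))
```

The first sequence’s hidden state stays nonzero after the input goes quiet (that is the memory), but it shrinks at every step. This fading influence of early inputs is a simple picture of why plain RNNs struggle with long sequences, which is the problem LSTM and GRU gating is designed to mitigate.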

Real-world uses:

- Machine translation

- Chatbots

- Predictive text

- Stock market prediction

- Speech-to-text

TL;DR – When to Use What?

| Model | Best For | Strengths | Limitations |
|---|---|---|---|
| GAN | Generating realistic data (images, audio) | Creativity, realism | Hard to train; prone to mode collapse |
| CNN | Image classification and object detection | Spatial understanding | Not great with sequences |
| SVM | Small structured datasets | High accuracy; interpretable with a linear kernel | Doesn’t scale well to big data |
| RNN | Sequential data (text, time series) | Contextual memory | Plain RNNs struggle with long-term dependencies |

In Summation

Machine learning isn’t magic—it’s math and clever design. But knowing the right model for the job is half the battle.

You wouldn’t bring a knife to a gunfight, as the saying goes, and you shouldn’t use a CNN to analyze stock trends or an RNN to classify a cat photo. The key is to match the model to your data’s structure and your goal’s complexity.