What LLMs and AI Mean for TPMs (Beyond Prompt Engineering)

Since ChatGPT exploded onto the scene, we all no doubt know someone who has started using it to write their status reports. But here’s the thing… For Technical Program Managers (TPMs), GenAI isn’t just a shiny utility; it’s a tectonic shift in how software is built, shipped, and scaled. And if you think “prompt engineering” is the only skill you need to keep up with AI, buckle up. You’re in for a longer roadmap.

Here are five reasons why TPMs need to do more than just copy-and-paste from ChatGPT.

1. LLMs Are Changing the Developer Workflow

Right off the bat: Large Language Models (LLMs) are not magic. They are statistical pattern matchers trained on internet-scale data. But they are changing the way developers write, debug, and even design code.

Code completion tools like GitHub Copilot are accelerating developer velocity. What does that mean for your timelines? They’re going to shrink. Or at least, your stakeholders will expect them to.

As a TPM, this means:

- Re-evaluating sprint velocities and estimates.

- Understanding where AI tools actually help vs. where they hallucinate into disaster.

- Navigating the compliance, privacy, and security questions that come with AI-assisted code.

2. Product Development Just Got a Lot Stranger

LLMs are enabling capabilities that didn’t exist before. Suddenly, your product manager wants to build an AI chatbot. Your designer has ideas for generative UIs. Your legal team is asking if the LLM you’re using will leak user data. We had faux citizen development in the past, but this time those disciplines are truly stepping into the development process.

Your job? Bring sanity to the chaos.

You’ll need to:

- Run feasibility assessments with ML teams.

- Translate “we want to use AI” into actual technical requirements.

- Balance experimentation with production-grade reliability.

Oh, and you may have to explain why no, ChatGPT can’t “just summarize all user feedback into actionable JIRA tickets.”

3. LLMs Introduce New Kinds of Technical Debt

Models drift. Prompts break. APIs change. And suddenly, your entire AI-powered feature is down because OpenAI updated their model response format.

Unlike traditional systems, LLM-based features are probabilistic. Just like human developers, they don’t always behave the same way. That unpredictability is now your problem.
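To make "probabilistic" concrete, here is a minimal sketch of the kind of consistency check an engineering team might run: ask the same question many times and see how often the answers agree. The `fake_model` function is a hypothetical stand-in that picks a label at random; it is not a real LLM client, and the labels and weights are made up for illustration.

```python
import random
from collections import Counter

LABELS = ["bug", "feature request", "question"]  # hypothetical output labels


def fake_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM call: returns a label at random."""
    return rng.choices(LABELS, weights=[0.8, 0.15, 0.05])[0]


def agreement_rate(prompt: str, runs: int, rng: random.Random) -> tuple[str, float]:
    """Send the same prompt `runs` times; report the most common answer and its share."""
    answers = Counter(fake_model(prompt, rng) for _ in range(runs))
    top_answer, top_count = answers.most_common(1)[0]
    return top_answer, top_count / runs


rng = random.Random(42)  # seeded so the sketch is repeatable
answer, rate = agreement_rate("Classify: app crashes on login", runs=50, rng=rng)
print(f"most common answer: {answer!r}, agreement: {rate:.0%}")
```

An agreement rate well below 100% on identical input is the conversation starter: is that acceptable for this feature, and who decides?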

TPMs need to:

- Work with engineering to build robust evaluation and monitoring pipelines.

- Plan for model retraining, API versioning, and fallback strategies.

- Advocate for “explainability” as a feature, not an afterthought.
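As a rough illustration of what a fallback strategy can look like in practice, the sketch below validates each model reply against an expected format and moves on to the next option when the check fails. Everything here is an assumption made for the example: the required keys, the `primary` and `secondary` provider functions, and the static last-resort answer are hypothetical, not any vendor's API.

```python
import json

REQUIRED_KEYS = {"summary", "priority"}  # hypothetical response contract


def parse_response(raw: str) -> dict:
    """Parse a model reply and check it against the expected contract."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


def summarize_with_fallback(text, providers):
    """Try each provider in order; return the first reply that passes validation."""
    errors = []
    for name, call in providers:
        try:
            return name, parse_response(call(text))
        except ValueError as exc:
            errors.append(f"{name}: {exc}")
    # Last resort: a deterministic, non-AI answer so the feature degrades
    # instead of going down.
    return "static", {"summary": text[:80], "priority": "unknown", "errors": errors}


def primary(text):
    """Hypothetical provider whose response format changed upstream."""
    return '{"result": "format changed upstream"}'


def secondary(text):
    """Hypothetical backup provider that still honors the contract."""
    return json.dumps({"summary": text.upper(), "priority": "high"})


source, result = summarize_with_fallback(
    "login page times out", [("primary", primary), ("secondary", secondary)]
)
print(source, result)
```

This sketch only handles malformed replies. Timeouts, rate limits, and cost ceilings would need their own handling, and the recorded errors are exactly what a monitoring pipeline would want to count.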

4. There’s a Whole New Set of Stakeholders

Remember when your stakeholder list was just product, engineering, and maybe QA? Welcome to the era of cross-functional whiplash:

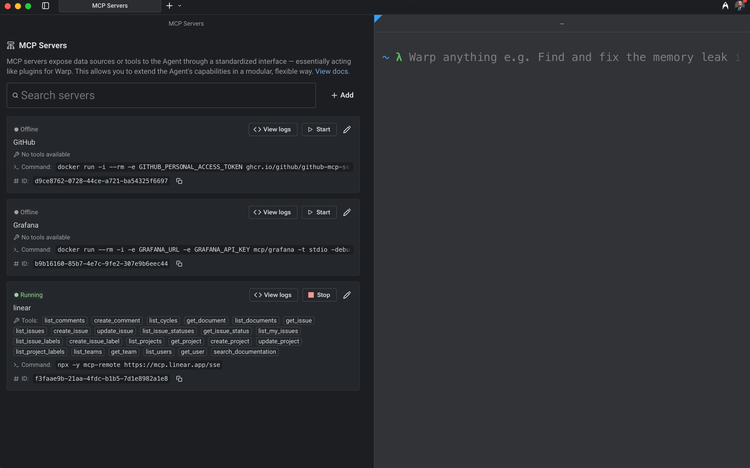

- ML/AI teams with their own roadmaps and rituals.

- Ethics and compliance teams trying to prevent lawsuits.

- Data science teams concerned about bias and fairness.

Suddenly, your job includes understanding model governance, data lineage, and responsible AI principles. No pressure.

5. You’re the Translator-in-Chief

AI projects tend to break down when teams can’t communicate. TPMs are uniquely positioned to bridge the gap between engineers, data scientists, designers, and execs.

That means:

- Learning just enough about transformers to know when someone’s bluffing.

- Translating business goals into AI system requirements.

- Knowing when “just fine-tune the model” is a six-week project, not a Slack message.

My Final Thoughts…

AI Isn’t Coming. It’s Here.

You don’t need to become an ML engineer. But you do need to get conversant in the risks, workflows, and vocabulary of modern AI. Because when the dust settles, the TPMs who can navigate this new landscape won’t just survive—they’ll be the ones leading the charge.

And no, you can’t delegate that to ChatGPT.

Hashtags:

#TPM #AI #LLM #ProductManagement #TechLeadership #ML #ProgramManagement #AIinTech #ResponsibleAI #FutureOfWork
