Understanding the Kubernetes Gateway API: A TPM’s Field Guide

If you’ve spent any time in the bullpen as a Technical Program Manager, you’ve probably come across the term Kubernetes Gateway. We touched on what Ingress is in a previous article. The Kubernetes Gateway API is its intended successor, promising more extensive and expressive traffic routing.

In our roles, we care more about scalability and velocity than the nitty-gritty of HTTP routing. In this article we’ll take a bird’s-eye view of what this API is, just enough to spark curiosity in a TPM’s mind.

But First, Ingress

Ingress was Kubernetes’ first attempt at standardizing north-south traffic routing (i.e., traffic coming into your cluster). It gave you a single resource (‘Ingress’) to define how incoming traffic is routed to your services.

The problem with Ingress is that it was overly simplistic. The spec covers basic host- and path-based routing and TLS termination, but not much else. Want traffic splitting, header-based matching, or more precise routing control? Too bad. Each ingress controller (Nginx, Istio, Traefik, etc.) interpreted the spec slightly differently or required its own custom annotations to make things work.
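To make the annotation problem concrete, here is a rough sketch of an Ingress manifest written as a Python dict. It assumes the ingress-nginx controller and its canary annotations; the resource name, hostname, and service name are made up for illustration. The small helper just groups annotation keys by their prefix, so you can see at a glance how much of a manifest is tied to one controller.

```python
# Sketch: an Ingress that relies on controller-specific annotations for a canary.
# The resource name, hostname, and service name are hypothetical.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {
        "name": "checkout-canary",
        "annotations": {
            # These keys are only understood by the ingress-nginx controller.
            "nginx.ingress.kubernetes.io/canary": "true",
            "nginx.ingress.kubernetes.io/canary-weight": "10",
        },
    },
    "spec": {
        "ingressClassName": "nginx",
        "rules": [{
            "host": "shop.example.com",
            "http": {"paths": [{
                "path": "/checkout",
                "pathType": "Prefix",
                "backend": {
                    "service": {"name": "checkout-v2", "port": {"number": 8080}}
                },
            }]},
        }],
    },
}


def controller_specific_annotations(manifest):
    """Group annotation keys by their prefix (the part before the '/')."""
    grouped = {}
    for key in manifest["metadata"].get("annotations", {}):
        prefix, _, name = key.partition("/")
        grouped.setdefault(prefix, []).append(name)
    return grouped


print(controller_specific_annotations(ingress))
```

Everything under the `nginx.ingress.kubernetes.io` prefix would have to be re-expressed if the team switched to a different controller, which is the portability gap in a nutshell.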

What Is the Gateway API?

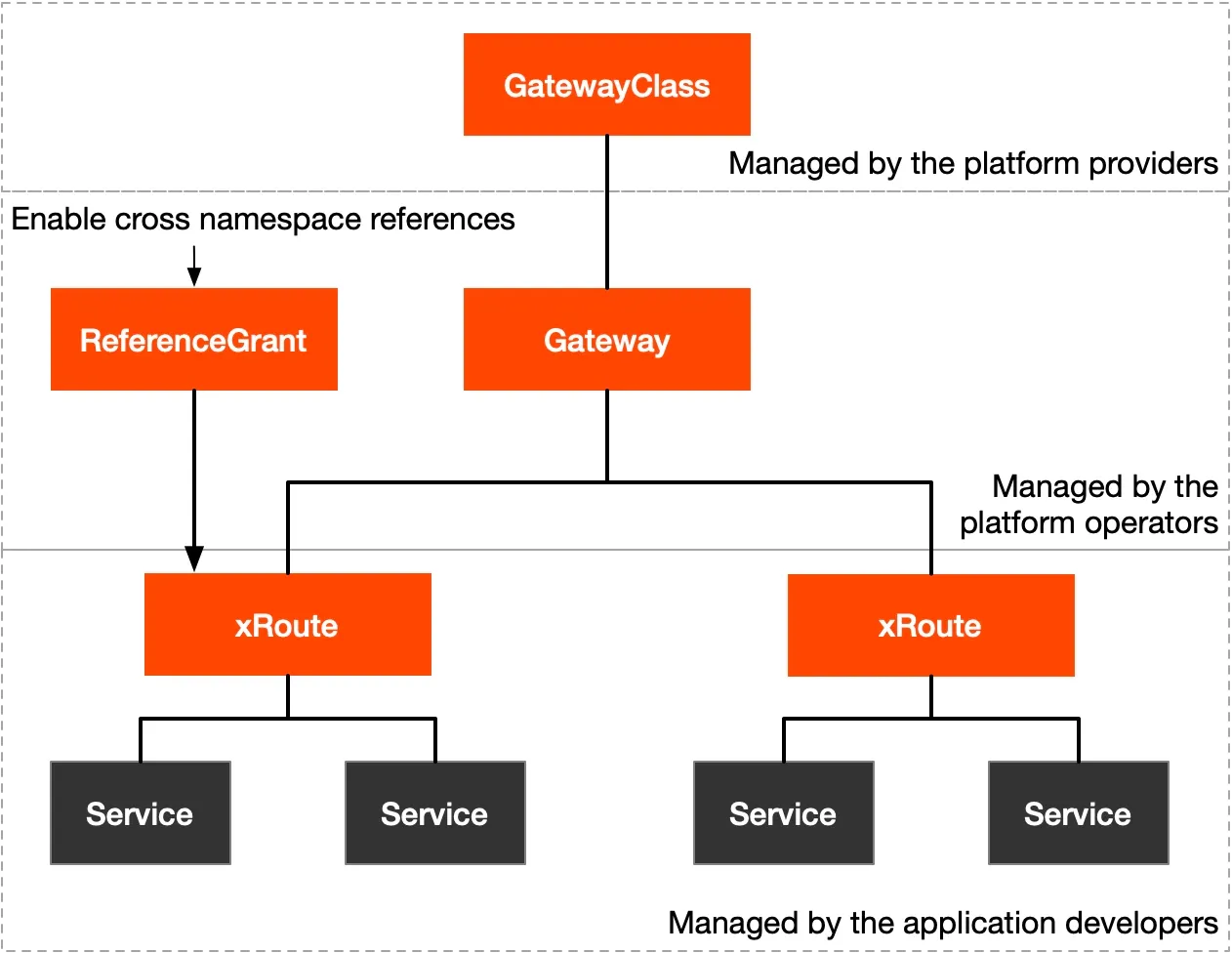

The Gateway API is a more expressive, extensible, and role-oriented model for routing traffic into (and within) your Kubernetes cluster. Instead of a single Ingress object, you now have a few key resources:

- GatewayClass: Defines the kind of gateway you want (e.g., an NGINX gateway, a cloud provider’s load balancer, etc.)

- Gateway: Represents the actual instance of a gateway; think of it as a virtual load balancer

- HTTPRoute, TCPRoute, etc.: Define how traffic should be routed, at a much more granular level than Ingress ever could
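For the curious, here is a minimal sketch of how these three resources point at each other, written as Python dicts rather than YAML. All names, the namespace, and the `controllerName` value are placeholders; a real setup would use whatever your chosen implementation documents.

```python
# Sketch: the three core Gateway API resources and how they reference each other.
# Every name below is hypothetical.
gateway_class = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "GatewayClass",
    "metadata": {"name": "example-class"},
    # controllerName identifies the implementation; this value is a placeholder.
    "spec": {"controllerName": "example.com/gateway-controller"},
}

gateway = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "Gateway",
    "metadata": {"name": "shop-gateway", "namespace": "demo"},
    "spec": {
        # A Gateway names the GatewayClass it is an instance of.
        "gatewayClassName": "example-class",
        "listeners": [{"name": "http", "protocol": "HTTP", "port": 80}],
    },
}

http_route = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "HTTPRoute",
    "metadata": {"name": "checkout-route", "namespace": "demo"},
    "spec": {
        # A route attaches itself to one or more Gateways via parentRefs.
        "parentRefs": [{"name": "shop-gateway"}],
        "rules": [{
            "matches": [{"path": {"type": "PathPrefix", "value": "/checkout"}}],
            "backendRefs": [{"name": "checkout-svc", "port": 8080}],
        }],
    },
}


def describe_chain(route, gw, gw_class):
    """Return a one-line summary of route -> gateway -> class, if they line up."""
    parents = [ref["name"] for ref in route["spec"]["parentRefs"]]
    if gw["metadata"]["name"] not in parents:
        return "route is not attached to this gateway"
    if gw["spec"]["gatewayClassName"] != gw_class["metadata"]["name"]:
        return "gateway does not use this class"
    return " -> ".join([
        route["metadata"]["name"],
        gw["metadata"]["name"],
        gw_class["metadata"]["name"],
    ])


print(describe_chain(http_route, gateway, gateway_class))
```

The point to take away is the direction of the references: routes point up to gateways, and gateways point up to a class.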

Tetrate explains it as:

Role-oriented: Since Kubernetes infrastructure is typically a shared resource, multiple people with different roles and responsibilities must jointly participate in various aspects of the configuration and management of those resources. The Gateway API seeks to strike a balance between distributed flexibility and centralized control, allowing shared infrastructure to be used effectively by multiple, potentially non-coordinated teams.

Expressive: As an advancement over Kubernetes Ingress, the Gateway API is meant to provide built-in core capabilities, such as header-based matching, traffic weighting, and other features that were previously only available through custom annotations that were not portable across implementations.
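As a rough illustration of the header-based matching and traffic weighting mentioned in the quote, the sketch below shows two HTTPRoute rules as Python dicts. The header name and service names are invented for the example. The helper computes each backend’s share of traffic from its weight, assuming the API’s documented default weight of 1 when none is set.

```python
# Sketch: HTTPRoute rules using header matching and weighted backends.
# The header name and service names are hypothetical.
rules = [
    {
        # Requests carrying this header always go to the new version.
        "matches": [{
            "headers": [{"type": "Exact", "name": "x-beta-tester", "value": "true"}]
        }],
        "backendRefs": [{"name": "checkout-v2", "port": 8080}],
    },
    {
        # Everyone else is split 90/10 between the two versions.
        "backendRefs": [
            {"name": "checkout-v1", "port": 8080, "weight": 90},
            {"name": "checkout-v2", "port": 8080, "weight": 10},
        ],
    },
]


def traffic_shares(rule):
    """Return each backend's fraction of traffic, proportional to its weight."""
    refs = rule["backendRefs"]
    total = sum(ref.get("weight", 1) for ref in refs)
    if total == 0:
        # All weights are zero, so no backend receives traffic.
        return {ref["name"]: 0.0 for ref in refs}
    return {ref["name"]: ref.get("weight", 1) / total for ref in refs}


for rule in rules:
    print(traffic_shares(rule))
```

Shifting a canary from 10% to 50% becomes a two-number change in the route, with no controller-specific annotations involved.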

The role-oriented model decouples concerns:

- Platform teams can define and manage GatewayClasses

- Infrastructure teams can provision and operate Gateways

- Application teams can own their own Routes

“The Gateway API gives us the flexibility to support multiple teams with different needs while maintaining central control over the critical entry points.” — Kelsey Hightower (probably)

Why We Should Care

You’re not configuring TLS certs yourself (I hope), but here’s why Gateway API should be on your radar:

1. Clearer ownership boundaries

Traditional Ingress setups blur the lines between app and infra teams. Gateway API introduces a cleaner contract. This means fewer cross-team fire drills when something breaks—and clearer RACI charts (if you’re into that sort of thing).

2. Better scalability across teams and clusters

As organizations grow, so do their routing needs. With Gateway API, you can scale routing logic per team or per environment without stepping on each other’s toes. It’s infrastructure that respects Conway’s Law.

3. Multi-tenancy done right

If you’re running a platform used by internal product teams or business units, Gateway API makes it easier to offer routing-as-a-service. Teams define their own routes; your platform ensures isolation and policy enforcement.
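One way the isolation piece works in practice is the `allowedRoutes` setting on a Gateway listener, which controls which namespaces may attach routes. The sketch below is a simplified model of that check, not the controller’s actual logic: it handles only the `All`, `Same`, and `Selector` (with `matchLabels`) cases, and the label and namespace names are made up.

```python
# Sketch: a simplified model of how a listener's allowedRoutes limits
# which namespaces can attach routes. Label and namespace names are hypothetical.
listener = {
    "name": "https",
    "protocol": "HTTPS",
    "port": 443,
    "allowedRoutes": {
        "namespaces": {
            "from": "Selector",
            "selector": {"matchLabels": {"shared-gateway-access": "true"}},
        }
    },
}


def route_namespace_allowed(listener, gateway_namespace, route_namespace, namespace_labels):
    """Return True if routes from route_namespace may attach to this listener.

    Only matchLabels selectors are modelled here; matchExpressions are ignored.
    """
    policy = listener.get("allowedRoutes", {}).get("namespaces", {})
    source = policy.get("from", "Same")  # "Same" is the API default
    if source == "All":
        return True
    if source == "Same":
        return route_namespace == gateway_namespace
    if source == "Selector":
        wanted = policy.get("selector", {}).get("matchLabels", {})
        return all(namespace_labels.get(k) == v for k, v in wanted.items())
    return False


# The payments team's namespace has been granted access; the sandbox has not.
print(route_namespace_allowed(listener, "infra", "payments", {"shared-gateway-access": "true"}))
print(route_namespace_allowed(listener, "infra", "sandbox", {}))
```

The platform team owns the label policy on namespaces, so onboarding a new tenant is a labelling decision rather than a change to the Gateway itself.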

4. Future-proofing your platform

Gateway API is rapidly becoming the new standard. It has the backing of Kubernetes SIG Network and most major service mesh and ingress vendors. Even Istio and Linkerd are getting in on the act. Migrating now means less tech debt later.

5. Policy and security are first-class citizens

Want to enforce TLS across the board? Control which routes can expose which paths? Gateway API gives you built-in support for policies and filters, without duct-taping things together.
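As a sketch of what “enforce TLS across the board” might look like, the example below pairs a Gateway that terminates TLS with an HTTPRoute that redirects plain HTTP to HTTPS using the built-in `RequestRedirect` filter. The hostname, Secret name, and resource names are placeholders, and the two helper functions are a hypothetical audit a platform team could run over its manifests, not part of the API.

```python
# Sketch: TLS termination on the Gateway plus an HTTP-to-HTTPS redirect route.
# Hostname, Secret name, and resource names are hypothetical.
gateway = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "Gateway",
    "metadata": {"name": "shop-gateway", "namespace": "infra"},
    "spec": {
        "gatewayClassName": "example-class",
        "listeners": [
            {"name": "http", "protocol": "HTTP", "port": 80},
            {
                "name": "https",
                "protocol": "HTTPS",
                "port": 443,
                "hostname": "shop.example.com",
                "tls": {
                    "mode": "Terminate",
                    "certificateRefs": [{"kind": "Secret", "name": "shop-example-com-tls"}],
                },
            },
        ],
    },
}

redirect_route = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "HTTPRoute",
    "metadata": {"name": "http-to-https", "namespace": "infra"},
    "spec": {
        # sectionName pins this route to the plain-HTTP listener only.
        "parentRefs": [{"name": "shop-gateway", "sectionName": "http"}],
        "rules": [{
            "filters": [{
                "type": "RequestRedirect",
                "requestRedirect": {"scheme": "https", "statusCode": 301},
            }],
        }],
    },
}


def plaintext_listeners(gw):
    """Names of listeners that do not use a TLS-based protocol."""
    return [
        l["name"] for l in gw["spec"]["listeners"]
        if l["protocol"] not in ("HTTPS", "TLS")
    ]


def redirects_to_https(route):
    """True if any rule in the route carries an HTTPS RequestRedirect filter."""
    for rule in route["spec"]["rules"]:
        for f in rule.get("filters", []):
            if (f.get("type") == "RequestRedirect"
                    and f.get("requestRedirect", {}).get("scheme") == "https"):
                return True
    return False


print(plaintext_listeners(gateway), redirects_to_https(redirect_route))
```

Because the redirect is a standard filter rather than an annotation, the same route should carry over between implementations that support it.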

Whether it’s roads, power, data centers, or Kubernetes clusters, infrastructure is built to be shared. However, shared infrastructure raises a common challenge: how do you give users of the infrastructure flexibility while its owners keep control?

Gateway API accomplishes this through a role-oriented design for Kubernetes service networking that strikes a balance between distributed flexibility and centralized control. It allows shared network infrastructure (hardware load balancers, cloud networking, cluster-hosted proxies, etc.) to be used by many different and non-coordinating teams, all bound by the policies and constraints set by cluster operators.

Gateway API in the Wild

It’s already being used by teams at Google, AWS, and large-scale SaaS companies that got tired of hacking around the Ingress spec.

If you’re running on GKE, for example, you can already provision a Gateway backed by Google Cloud Load Balancing. On AWS? Look into the AWS Gateway API Controller, which is backed by Amazon VPC Lattice.

And if your team is already using service meshes like Istio or Kuma, good news: Gateway API works well with those too, especially for ingress and east-west traffic routing.

Risks, Gotchas, and the Road Ahead

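Gateway API is still evolving. Not all controllers support every feature. The core resources (GatewayClass, Gateway, HTTPRoute) are generally available, but some route types, such as TCPRoute, are still in the experimental channel at the time of writing. And like any powerful tool, it can be over-engineered into oblivion if you’re not careful.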

But it’s maturing fast. And more importantly, it’s built on lessons learned from years of Ingress pain.

If you’re planning a migration, treat it like any strategic platform initiative:

- Start with a POC

- Define clear ownership boundaries

- Align infra and app teams early

- Track which controllers support the features you need (check gateway-api.sigs.k8s.io)

In Conclusion

The Kubernetes Gateway API isn’t just a new toy for cluster nerds. It’s a smarter, cleaner way to manage application traffic—and a step toward making platform engineering more sustainable at scale.

For TPMs, understanding this shift means you can help your teams plan migrations, reduce friction, and avoid yet another Slack thread full of angry emojis and DNS horror stories.

So the next time someone says “We’re thinking of moving to Gateway API,” you’ll know a bit more about why it matters and maybe even ask the smart questions before the engineers do.

Further Reading:

- Official Gateway API Docs

- Kubernetes Blog: Gateway API - Evolution of Kubernetes Networking

- Istio Gateway API support
